Evaluating multiple models with RandomizedSearchCV

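Line 4 splits the data into training and test sets at a 75:25 ratio (`test_size=0.25`). Note that the search itself is later fitted on the full `X` and `y`, so `X_test` is not actually used in this code.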
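To illustrate what `test_size=0.25` does, the sketch below splits a hypothetical 100-row toy array (not the Titanic data) and prints the resulting sizes.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 100 rows, 3 feature columns, alternating 0/1 labels
X = np.arange(300).reshape(100, 3)
y = np.array([0, 1] * 50)

# test_size=0.25 reserves 25% of the rows for testing and keeps 75% for training
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

print(len(X_train), len(X_test))  # 75 25
```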

Lines 7-65 define the models to evaluate.
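The keys of each `params` dictionary must match the estimator's constructor arguments. The full listing at the end contains a commented-out `get_params().keys()` call for checking this; a minimal sketch of the same idea, applied to the SVC search space from `model_params`, could look like this.

```python
from sklearn.svm import SVC

# Search space for SVC, copied from model_params['clf4']
params = {
    'kernel': ['linear', 'poly', 'rbf'],
    'gamma': ['scale', 'auto'],
    'decision_function_shape': ['ovr', 'ovo'],
}

# get_params() returns every constructor parameter the estimator accepts
valid_keys = set(SVC().get_params().keys())
unknown = set(params) - valid_keys

print('unknown keys:', unknown)  # unknown keys: set()
```

An empty set means every key in the search space is a valid SVC parameter.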

For numeric parameters, specify a contiguous range of values rather than a few scattered ones.

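Here this is done with Python's `list(range())`; for example, `list(range(1, 101))` produces the integers 1 to 100. Strictly speaking, this is still a list of discrete values that RandomizedSearchCV samples from, not a continuous distribution.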
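For parameters that are truly continuous, `RandomizedSearchCV` also accepts any object with an `rvs()` method, such as the `scipy.stats` distributions, which is what the ★ comment in the code recommends. The sketch below uses `ParameterSampler` (the sampler `RandomizedSearchCV` uses internally) to draw a few candidates. The log-uniform range for SVC's `C` is an illustrative assumption, not a value from this article.

```python
from scipy.stats import loguniform, randint
from sklearn.model_selection import ParameterSampler

# KNN: integers 1..100 (randint's upper bound is exclusive),
# the same range as list(range(1, 101))
knn_space = {
    'n_neighbors': randint(1, 101),
    'weights': ['uniform', 'distance'],
}

# SVC: C drawn from a continuous log-uniform distribution (hypothetical range 0.01..100)
svc_space = {
    'C': loguniform(1e-2, 1e2),
    'kernel': ['linear', 'poly', 'rbf'],
}

knn_samples = list(ParameterSampler(knn_space, n_iter=5, random_state=0))
svc_samples = list(ParameterSampler(svc_space, n_iter=5, random_state=0))

for params in knn_samples + svc_samples:
    print(params)
```

The same dictionaries can be passed directly as the `params` entries of `model_params`.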

For string-valued parameters, list every option you want the search to try.

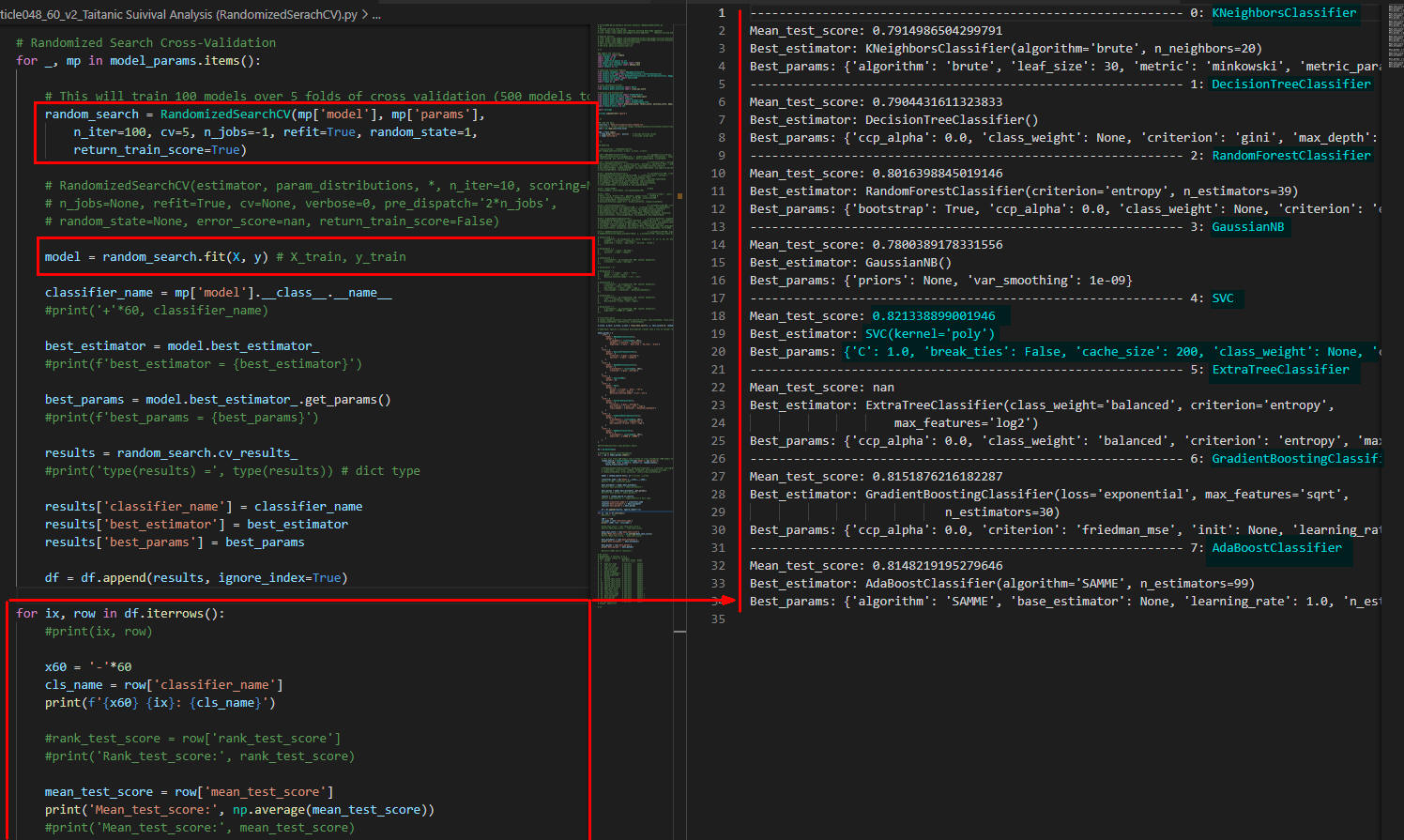

Lines 70-88 train and evaluate each model with `RandomizedSearchCV()` and `fit()`.

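Because `RandomizedSearchCV()` is given `n_iter=100, cv=5`, each model is trained and evaluated up to 500 times (100 parameter candidates × 5 folds), plus one final refit on the whole data set because `refit=True`. When every parameter is given as a list and there are fewer than 100 combinations, scikit-learn evaluates each combination only once instead: DecisionTreeClassifier, for instance, has only 2 × 2 = 4 combinations, or 20 fits.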
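The sketch below checks the upper-bound behavior on hypothetical synthetic data from `make_classification`: it runs the article's DecisionTreeClassifier search space with `n_iter=100, cv=5` and counts how many candidates were actually evaluated.

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.tree import DecisionTreeClassifier

# Hypothetical synthetic data standing in for the Titanic features
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Same search space as the article's DecisionTreeClassifier: 2 x 2 = 4 combinations
params = {'criterion': ['gini', 'entropy'], 'splitter': ['best', 'random']}

with warnings.catch_warnings():
    # scikit-learn warns that the parameter space (4) is smaller than n_iter (100)
    warnings.simplefilter('ignore')
    search = RandomizedSearchCV(DecisionTreeClassifier(random_state=0), params,
                                n_iter=100, cv=5, random_state=1)
    search.fit(X, y)

n_candidates = len(search.cv_results_['params'])
n_fits = n_candidates * 5  # candidates x CV folds (the final refit is not counted)
print(n_candidates, n_fits)  # 4 20
```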

Lines 90-102 display the evaluation results for each model.
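`DataFrame.append()` was removed in pandas 2.0, so on current pandas the per-model results have to be collected another way. A minimal sketch, assuming pandas 2.x and a reduced, hypothetical `model_params` on synthetic data: collect one dict per model in a list and build the DataFrame once at the end. This version keeps a compact summary (`best_score_`, `best_params_`) rather than the whole `cv_results_`.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Hypothetical synthetic data
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Hypothetical, reduced version of the article's model_params
model_params = {
    'clf1': {'model': DecisionTreeClassifier(random_state=0),
             'params': {'criterion': ['gini', 'entropy']}},
    # GaussianNB has nothing to search, so scikit-learn warns and runs one candidate
    'clf3': {'model': GaussianNB(), 'params': {}},
}

rows = []  # one summary dict per model
for mp in model_params.values():
    search = RandomizedSearchCV(mp['model'], mp['params'], n_iter=2, cv=5, random_state=1)
    search.fit(X, y)
    rows.append({
        'classifier_name': type(mp['model']).__name__,
        'best_score': search.best_score_,
        'best_params': search.best_params_,
    })

# Build the DataFrame once, then rank the models by their best CV score
summary = pd.DataFrame(rows).sort_values('best_score', ascending=False)
print(summary)
```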

```python
### Modeling

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Important: Specify a continuous distribution (rather than a list of values) for any continuous parameters ★
model_params = {
    'clf0': {
        'model': KNeighborsClassifier(),
        'params': {
            'n_neighbors': list(range(1, 101)),
            'weights': ['uniform', 'distance'],
            'algorithm': ['auto', 'ball_tree', 'kd_tree', 'brute']
        }
    },
    'clf1': {
        'model': DecisionTreeClassifier(),
        'params': {
            'criterion': ['gini', 'entropy'],
            'splitter': ['best', 'random']
        }
    },
    'clf2': {
        'model': RandomForestClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'criterion': ['gini', 'entropy']
        }
    },
    'clf3': {
        'model': GaussianNB(),
        'params': {}
    },
    'clf4': {
        'model': SVC(),
        'params': {
            'kernel': ['linear', 'poly', 'rbf'],
            'gamma': ['scale', 'auto'],
            'decision_function_shape': ['ovr', 'ovo']
        }
    },
    'clf5': {
        'model': ExtraTreesClassifier(),  # the forest: 'balanced_subsample' is rejected by the single-tree ExtraTreeClassifier
        'params': {
            'criterion': ['gini', 'entropy'],
            'max_features': ['auto', 'sqrt', 'log2'],  # 'auto' was removed in scikit-learn 1.3
            'class_weight': ['balanced', 'balanced_subsample']
        }
    },
    'clf6': {
        'model': GradientBoostingClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'loss': ['deviance', 'exponential'],  # 'deviance' is named 'log_loss' from scikit-learn 1.1 on
            'max_features': ['auto', 'sqrt', 'log2']
        }
    },
    'clf7': {
        'model': AdaBoostClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'algorithm': ['SAMME.R', 'SAMME']  # 'SAMME.R' was removed in scikit-learn 1.6
        }
    }
}

rows = []  # one cv_results_ dict per model

# Randomized Search Cross-Validation
for _, mp in model_params.items():
    # Up to 100 candidates x 5 folds of cross-validation (500 fits per model)
    random_search = RandomizedSearchCV(mp['model'], mp['params'],
                                       n_iter=100, cv=5, n_jobs=-1, refit=True, random_state=1,
                                       return_train_score=True)
    model = random_search.fit(X, y)

    classifier_name = mp['model'].__class__.__name__
    best_estimator = model.best_estimator_
    best_params = model.best_estimator_.get_params()  # all parameters, not only the searched ones

    results = random_search.cv_results_
    results['classifier_name'] = classifier_name
    results['best_estimator'] = best_estimator
    results['best_params'] = best_params
    rows.append(results)  # DataFrame.append() was removed in pandas 2.0

df = pd.DataFrame(rows)

for ix, row in df.iterrows():
    x60 = '-' * 60
    cls_name = row['classifier_name']
    print(f'{x60} {ix}: {cls_name}')
    mean_test_score = row['mean_test_score']
    print('Mean_test_score:', np.average(mean_test_score))  # average over all sampled candidates
    best_estimator = row['best_estimator']
    print('Best_estimator:', best_estimator)
    best_params = row['best_params']
    print('Best_params:', best_params)
```

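Figure 3

Figure 3 shows the execution results.

The evaluation results are displayed in VS Code's Interactive Window.

SVC scores highest, at 0.821 (82%). This is the printed `Mean_test_score`, that is, the average of `mean_test_score` over all parameter candidates sampled for the model, not the score of its single best candidate (`best_score_`).

The best parameters found for SVC are:

`Best_params: {'C': 1.0, 'break_ties': False, 'cache_size': 200, 'class_weight': None, 'coef0': 0.0, 'decision_function_shape': 'ovr', 'degree': 3, 'gamma': 'scale', 'kernel': 'poly', 'max_iter': -1, 'probability': False, 'random_state': None, 'shrinking': True, 'tol': 0.001, 'verbose': False}`

Since the code prints `best_estimator_.get_params()`, this dictionary contains every SVC parameter, including ones that were not searched. Of the searched parameters, the best candidate uses `kernel='poly'`, `gamma='scale'`, and `decision_function_shape='ovr'`.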
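To see the difference between the printed average and the best candidate, the sketch below runs a small SVC search on hypothetical synthetic data and prints both values, together with `best_params_` (only the searched parameters) and the number of entries in `best_estimator_.get_params()` (every parameter).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

# Hypothetical synthetic data
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# 3 x 2 = 6 combinations, all of which are sampled with n_iter=6
params = {'kernel': ['linear', 'poly', 'rbf'], 'gamma': ['scale', 'auto']}
search = RandomizedSearchCV(SVC(), params, n_iter=6, cv=5, random_state=1)
search.fit(X, y)

scores = search.cv_results_['mean_test_score']
print('average over candidates:', np.average(scores))  # what the article's loop prints
print('best candidate:', search.best_score_)           # the highest mean_test_score
print('searched params only:', search.best_params_)
print('all params:', len(search.best_estimator_.get_params()), 'keys')
```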


The complete code

Finally, here is all of the code explained in this article in one place for reference.

```python
### Import the libraries
from functools import reduce
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

# Importing Classifier Modules
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier, ExtraTreeClassifier
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier, BaggingClassifier, AdaBoostClassifier, GradientBoostingClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Cross Validation (k-fold)
from sklearn.model_selection import KFold
from sklearn.model_selection import cross_val_score

# Pipeline and GridSearchCV
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import RandomizedSearchCV
from sklearn.metrics import precision_score, recall_score, accuracy_score, make_scorer, f1_score, roc_auc_score
import sklearn.metrics as skm

import warnings
warnings.simplefilter('ignore')

# %%
### Load the data
train_file = 'data/csv/titanic/train_cleaned.csv'
#train_file = 'https://money-or-ikigai.com/menu/python/article/data/titanic/train_cleaned.csv'
train = pd.read_csv(train_file)

temp = train.copy()
X = temp.drop('Survived', axis=1)  # Exclude Survived column
y = temp['Survived']  # Survived column only

# %%
### Modeling
# Classification - randomized_search()
#def random_search(X_train, X_test, y_train, y_test):

#clf0 = KNeighborsClassifier() # n_neighbors=[3,5,10,15], weights={'uniform', 'distance'}, algorithm={'auto', 'ball_tree', 'kd_tree', 'brute'}
# KNeighborsClassifier(n_neighbors=5, *, weights={'uniform', 'distance'}, algorithm={'auto', 'ball_tree', 'kd_tree', 'brute'},
#     leaf_size=30, p=2, metric='minkowski', metric_params=None, n_jobs=None)

#clf1 = DecisionTreeClassifier() # criterion={'gini','entropy'}, splitter={'best','random'}
# DecisionTreeClassifier(*, criterion={'gini','entropy'}, splitter={'best','random'}, max_depth=None,
#     min_samples_split=2, min_samples_leaf=1, min_weight_fraction_leaf=0.0,
#     max_features=None, random_state=None, max_leaf_nodes=None, min_impurity_decrease=0.0,
#     class_weight=None, ccp_alpha=0.0)

#clf2 = RandomForestClassifier() # n_estimators=1-100, criterion={'gini','entropy'}
# RandomForestClassifier(n_estimators=100, *, criterion={'gini','entropy'},
#     max_depth=None, min_samples_split=2, min_samples_leaf=1,
#     min_weight_fraction_leaf=0.0, max_features='auto', max_leaf_nodes=None,
#     min_impurity_decrease=0.0, bootstrap=True, oob_score=False,
#     n_jobs=None, random_state=None, verbose=0, warm_start=False,
#     class_weight=None, ccp_alpha=0.0, max_samples=None)

#clf3 = GaussianNB() # None
# GaussianNB(*, priors=None, var_smoothing=1e-09)

#clf4 = SVC() # kernel={'linear', 'poly', 'rbf', 'sigmoid', 'precomputed'}, gamma={'scale','auto'}, decision_function_shape={'ovr','ovo'}
# SVC(*, C=1.0, kernel='rbf', degree=3, gamma='scale', coef0=0.0,
#     shrinking=True, probability=False, tol=0.001, cache_size=200,
#     class_weight=None, verbose=False, max_iter=-1,
#     decision_function_shape='ovr', break_ties=False, random_state=None)

#clf5 = ExtraTreesClassifier() # n_estimators=10-100, criterion={'gini','entropy'}, max_features={'auto','sqrt','log2'}, class_weight={'balanced', 'balanced_subsample'}
# ExtraTreesClassifier(n_estimators=100, *, criterion='gini', max_depth=None,
#     min_samples_split=2, min_samples_leaf=1, min_weight_fraction_leaf=0.0,
#     max_features='auto', max_leaf_nodes=None, min_impurity_decrease=0.0,
#     bootstrap=False, oob_score=False, n_jobs=None, random_state=None, verbose=0,
#     warm_start=False, class_weight=None, ccp_alpha=0.0, max_samples=None)

#clf6 = GradientBoostingClassifier() # loss={'deviance','exponential'}, n_estimators=10-100
# GradientBoostingClassifier(*, loss='deviance', learning_rate=0.1, n_estimators=100,
#     subsample=1.0, criterion='friedman_mse', min_samples_split=2, min_samples_leaf=1,
#     min_weight_fraction_leaf=0.0, max_depth=3, min_impurity_decrease=0.0,
#     init=None, random_state=None, max_features=None, verbose=0, max_leaf_nodes=None,
#     warm_start=False, validation_fraction=0.1, n_iter_no_change=None, tol=0.0001, ccp_alpha=0.0)

#clf7 = AdaBoostClassifier() # n_estimators=10-100, algorithm={'SAMME.R','SAMME'}
# AdaBoostClassifier(base_estimator=None, *, n_estimators=50, learning_rate=1.0, algorithm='SAMME.R', random_state=None)

# Train-test split
# sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None,
#     random_state=None, shuffle=True, stratify=None)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Important: Specify a continuous distribution (rather than a list of values) for any continuous parameters ★
model_params = {
    'clf0': {
        'model': KNeighborsClassifier(),
        'params': {
            'n_neighbors': list(range(1, 101)),
            'weights': ['uniform', 'distance'],
            'algorithm': ['auto', 'ball_tree', 'kd_tree', 'brute']
        }
    },
    'clf1': {
        'model': DecisionTreeClassifier(),
        'params': {
            'criterion': ['gini', 'entropy'],
            'splitter': ['best', 'random']
        }
    },
    'clf2': {
        'model': RandomForestClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'criterion': ['gini', 'entropy']
        }
    },
    'clf3': {
        'model': GaussianNB(),
        'params': {}
    },
    'clf4': {
        'model': SVC(),
        'params': {
            'kernel': ['linear', 'poly', 'rbf'],
            'gamma': ['scale', 'auto'],
            'decision_function_shape': ['ovr', 'ovo']
        }
    },
    'clf5': {
        'model': ExtraTreesClassifier(),  # the forest: 'balanced_subsample' is rejected by the single-tree ExtraTreeClassifier
        'params': {
            'criterion': ['gini', 'entropy'],
            'max_features': ['auto', 'sqrt', 'log2'],  # 'auto' was removed in scikit-learn 1.3
            'class_weight': ['balanced', 'balanced_subsample']
        }
    },
    'clf6': {
        'model': GradientBoostingClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'loss': ['deviance', 'exponential'],  # 'deviance' is named 'log_loss' from scikit-learn 1.1 on
            'max_features': ['auto', 'sqrt', 'log2']
        }
    },
    'clf7': {
        'model': AdaBoostClassifier(),
        'params': {
            'n_estimators': list(range(1, 101)),
            'algorithm': ['SAMME.R', 'SAMME']  # 'SAMME.R' was removed in scikit-learn 1.6
        }
    }
}

#ExtraTreesClassifier().get_params().keys()

rows = []  # one cv_results_ dict per model

# Randomized Search Cross-Validation
for _, mp in model_params.items():
    # Up to 100 candidates x 5 folds of cross-validation (500 fits per model)
    random_search = RandomizedSearchCV(mp['model'], mp['params'],
                                       n_iter=100, cv=5, n_jobs=-1, refit=True, random_state=1,
                                       return_train_score=True)
    # RandomizedSearchCV(estimator, param_distributions, *, n_iter=10, scoring=None,
    #     n_jobs=None, refit=True, cv=None, verbose=0, pre_dispatch='2*n_jobs',
    #     random_state=None, error_score=nan, return_train_score=False)
    model = random_search.fit(X, y)  # fits on all of X, y; pass X_train, y_train to keep the test set unseen

    classifier_name = mp['model'].__class__.__name__
    #print('+'*60, classifier_name)
    best_estimator = model.best_estimator_
    #print(f'best_estimator = {best_estimator}')
    best_params = model.best_estimator_.get_params()  # all parameters, not only the searched ones
    #print(f'best_params = {best_params}')

    results = random_search.cv_results_
    #print('type(results) =', type(results)) # dict type
    results['classifier_name'] = classifier_name
    results['best_estimator'] = best_estimator
    results['best_params'] = best_params
    rows.append(results)  # DataFrame.append() was removed in pandas 2.0

df = pd.DataFrame(rows)

for ix, row in df.iterrows():
    #print(ix, row)
    x60 = '-' * 60
    cls_name = row['classifier_name']
    print(f'{x60} {ix}: {cls_name}')
    #rank_test_score = row['rank_test_score']
    #print('Rank_test_score:', rank_test_score)
    mean_test_score = row['mean_test_score']
    print('Mean_test_score:', np.average(mean_test_score))  # average over all sampled candidates
    #print('Mean_test_score:', mean_test_score)
    best_estimator = row['best_estimator']
    print('Best_estimator:', best_estimator)
    best_params = row['best_params']
    print('Best_params:', best_params)
    #print(f'{x60} end of row({ix})')

# df.info()
```